SoftVideo: Improving the Learning Experience of Software Tutorial Videos with Collective Interaction Data

IUI 2022

Jisu Yim

KAIST

Aitolkyn Baigutanova

KAIST

Many people rely on tutorial videos when learning to perform tasks using complex software. Watching the video for instructions and applying them to the target software requires frequently switching back and forth between the two, which incurs cognitive overhead. Furthermore, users need to constantly compare the two to check whether they are following correctly, as they are prone to missing subtle differences. We propose SoftVideo, a prototype system that helps users plan ahead before watching each step in tutorial videos and gives users feedback and help on their progress. SoftVideo is powered by collective interaction data, as the experiences of previous learners with the same goal can provide insights into how they learned from the tutorial. By identifying the difficulty and relatedness of each step from the interaction logs, SoftVideo provides information on each step such as its estimated difficulty, lets users know whether they completed or missed a step, and suggests tips such as relevant steps when it detects that users are struggling. To enable such a data-driven system, we collected and analyzed video interaction logs and the associated Photoshop usage logs for two tutorial videos from 120 users. We then defined six metrics that portray the difficulty of each step, including the time taken to complete a step and the number of pauses in a step, which were also used to detect users' struggling moments by comparing their progress to the collected data. To investigate the feasibility and usefulness of SoftVideo, we ran a user study with 30 participants in which they performed a Photoshop task by following along with a tutorial video using SoftVideo. Results show that participants could proactively and effectively plan their pauses and playback speed, and adjust their concentration level. They were also able to identify and recover from errors with the help that SoftVideo provides.

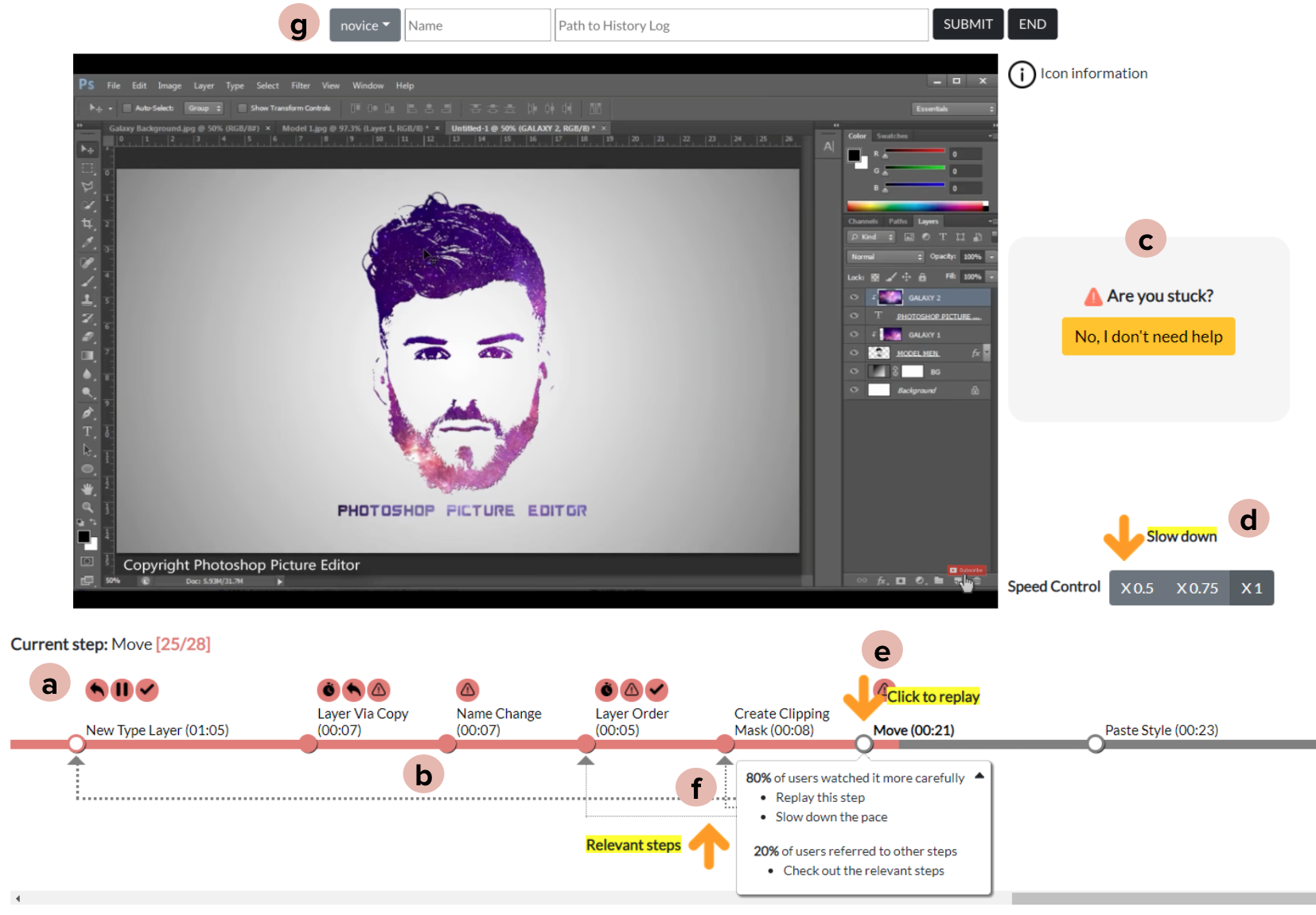

SoftVideo provides step information, gives feedback to learners on their progress, and provides help to overcome confusing moments.

Along with the software tutorial video, SoftVideo provides (a) a timeline where users can see each step's action name, its length, and its estimated difficulty. (b) Users receive real-time feedback on their progress: when a user has followed a step, its circle is filled. (c) SoftVideo detects moments when users are confused. Once such a moment is detected, it offers suggestions such as (d) slowing down the pace, (e) replaying the step, or (f) seeing relevant steps. Users see customized information based on (g) the expertise level they enter.
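To make the followed/missed feedback in (b) concrete, here is a minimal sketch of one way such a status could be derived. This page does not say how SoftVideo matches Photoshop actions to steps, so everything below is an assumption: each step is taken to correspond to one named Photoshop action, steps are matched in order, and a step counts as missed once playback has passed its end without the action appearing. The `Step` class and `step_status` function are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class Step:
    index: int
    action: str   # hypothetical: name of the Photoshop action this step demonstrates
    start: float  # step start time in the video, in seconds
    end: float    # step end time in the video, in seconds


def step_status(steps, performed_actions, video_time):
    """Return {step index: 'followed' | 'missed' | 'pending'}.

    performed_actions: Photoshop action names the user has performed so far, in order.
    Assumption: steps are matched against the action log in order, so an action
    can only satisfy a step if it occurs after the action matched to an earlier step.
    """
    statuses = {}
    cursor = 0  # position in performed_actions after the last matched action
    for step in steps:
        try:
            found = performed_actions.index(step.action, cursor)
        except ValueError:
            found = -1
        if found >= 0:
            statuses[step.index] = "followed"  # shown as a filled circle
            cursor = found + 1
        elif video_time > step.end:
            statuses[step.index] = "missed"    # playback has moved past this step
        else:
            statuses[step.index] = "pending"   # step not finished in the video yet
    return statuses
```

For example, with steps Crop, Move, and Brush and an action log of `["Crop", "Brush"]`, Move is reported as missed once playback passes its end and as pending before that.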

To build SoftVideo, we leverage data from previous learners who watched the same tutorial and worked toward the same end goal. We chose Adobe Photoshop as an instance of such software and collected 120 complete interaction logs across two tutorial videos from 74 participants. Our data analysis pipeline then used the collected data to (1) estimate the difficulty of each step and (2) identify the relatedness of steps, based on how users behaved on each step.

We first defined six difficulty-related measures and computed them for each step. Then, for each step, we identified the measures whose value exceeds the third quartile of that measure across all steps. Those measures are shown as icons on the step's segment of the timeline.
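The flagging rule in the paragraph above can be sketched as follows. This is a minimal illustration, not SoftVideo's implementation: the input layout is hypothetical, and the quartile method (`statistics.quantiles` with `method="inclusive"`) is an assumption, since the page does not specify how the third quartile is computed.

```python
from statistics import quantiles


def flag_difficult_measures(step_measures):
    """step_measures: {step_id: {measure_name: value}} covering every step of one video.

    Returns {step_id: [names of measures whose value exceeds that measure's
    third quartile (Q3) across all steps]}; these would be shown as icons.
    """
    measure_names = list(next(iter(step_measures.values())).keys())
    q3 = {}
    for name in measure_names:
        values = [m[name] for m in step_measures.values()]
        # quantiles(..., n=4) returns [Q1, Q2, Q3]; "inclusive" treats the steps as the full population
        q3[name] = quantiles(values, n=4, method="inclusive")[2]
    return {
        step: [name for name in measure_names if m[name] > q3[name]]
        for step, m in step_measures.items()
    }
```

Because the threshold is relative to the video's own steps, roughly a quarter of the steps can receive each icon regardless of how hard the tutorial is overall.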

| Meaning of the timeline icon | Measure |
|---|---|
| Users spent more time on this step than on other steps. | Execution Time Index |
| Users rewatched this step more than other steps. | Repetition Time Index |
| Users jumped backward at this step more often than at other steps. | Backjump Frequency |
| Users paused at this step more often than at other steps. | Pause Frequency |
| A relatively large number of users missed this step. | Miss Rate |
| A relatively large number of users followed this step again. | Re-follow Rate |
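The table names the measures but not their formulas. As a rough illustration of how four of them could be derived from the logs, the sketch below uses assumed definitions: Pause Frequency counts pause events whose video time falls in the step, Backjump Frequency counts seeks from inside the step to an earlier position, Miss Rate is the share of users who never followed the step, and Re-follow Rate is the share who followed it more than once. The event tuple format and all function names are hypothetical.

```python
from bisect import bisect_right
from collections import Counter


def step_of(video_time, step_starts):
    """Index of the step containing video_time; step_starts is sorted, e.g. [0, 12.5, 30.0]."""
    return max(bisect_right(step_starts, video_time) - 1, 0)


def per_user_counts(events, step_starts):
    """events: (kind, video_time, target_time) tuples for one user, kind in {'pause', 'seek'};
    target_time is the seek destination (None for pauses).
    Returns (pauses per step, backward jumps per step) as Counters."""
    pauses, backjumps = Counter(), Counter()
    for kind, t, target in events:
        step = step_of(t, step_starts)
        if kind == "pause":
            pauses[step] += 1
        elif kind == "seek" and target is not None and target < t:
            backjumps[step] += 1  # a jump to an earlier point, attributed to the step it left
    return pauses, backjumps


def miss_and_refollow_rates(follow_counts, n_steps):
    """follow_counts: one dict per user mapping step index -> number of times followed.
    Returns (miss rate per step, re-follow rate per step) as lists."""
    n_users = len(follow_counts)
    miss = [sum(1 for u in follow_counts if u.get(s, 0) == 0) / n_users for s in range(n_steps)]
    refollow = [sum(1 for u in follow_counts if u.get(s, 0) > 1) / n_users for s in range(n_steps)]
    return miss, refollow
```

Pause and backjump counts are per user, which is what allows them to be compared against per-user thresholds; Miss Rate and Re-follow Rate only exist across a population of users.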

Additionally, for the measures that can be computed per user (i.e., Execution Time Index, Repetition Time Index, Backjump Frequency, and Pause Frequency), we computed the third quartile of each measure for each step among users in the same group (i.e., Novice or Experienced). We used these values as thresholds to detect whether a user is having difficulty.
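A minimal sketch of the per-group thresholds described above, assuming a flat list of records with one record per previous user per step. The record layout, the `build_thresholds` and `is_struggling` names, and the rule that exceeding any single threshold signals difficulty are all assumptions for illustration; the quartile method is also assumed.

```python
from statistics import quantiles

PER_USER_MEASURES = (
    "Execution Time Index",
    "Repetition Time Index",
    "Backjump Frequency",
    "Pause Frequency",
)


def build_thresholds(records):
    """records: dicts with keys 'group' ('Novice' or 'Experienced'), 'step',
    and the four per-user measures. Returns {(group, step, measure): Q3}."""
    by_key = {}
    for r in records:
        for m in PER_USER_MEASURES:
            by_key.setdefault((r["group"], r["step"], m), []).append(r[m])
    # Q3 among users of the same group on the same step
    return {key: quantiles(vals, n=4, method="inclusive")[2] for key, vals in by_key.items()}


def is_struggling(thresholds, group, step, current):
    """current: {measure: value} for the learner's progress on this step so far.
    Returns the measures exceeding the group's threshold (empty list = no difficulty detected)."""
    return [
        m for m, v in current.items()
        if (group, step, m) in thresholds and v > thresholds[(group, step, m)]
    ]
```

Splitting thresholds by self-reported group means a novice is compared with other novices, so slower but typical novice behavior is less likely to trigger a suggestion.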

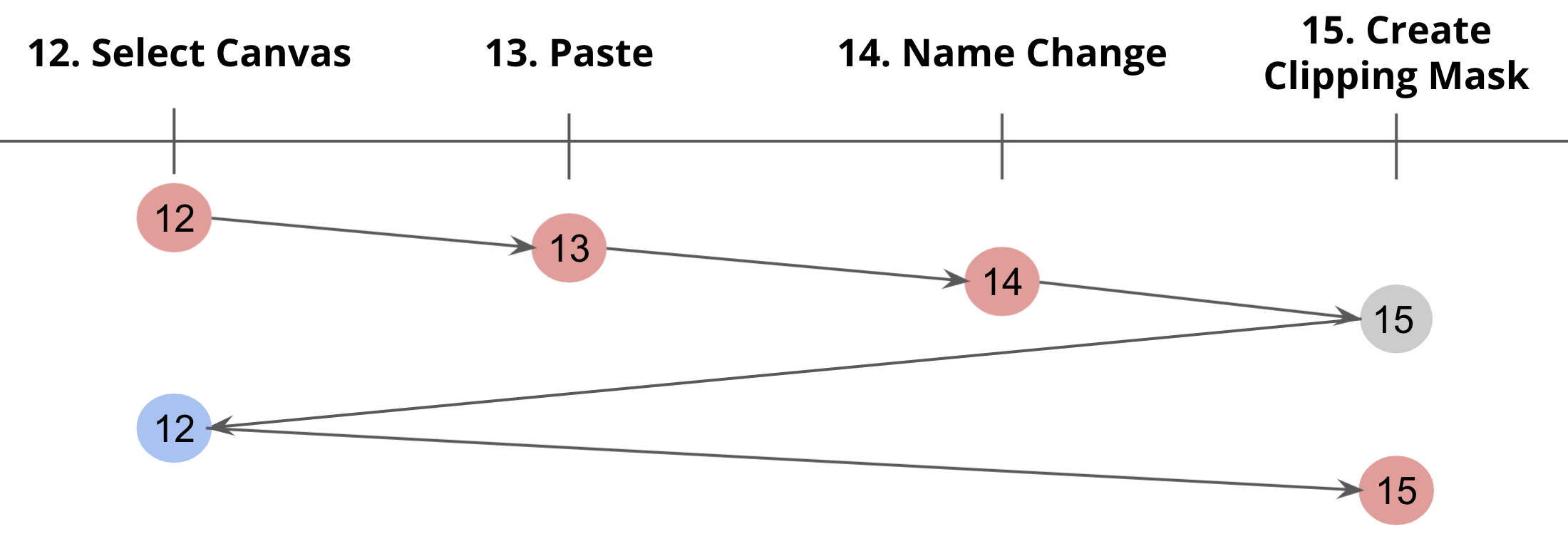

We defined three measures about the relatedness of steps. Relevant step information can help learners who get stuck on a certain step by suggesting that they revisit related steps. To help learners decide whether they should check the relevant steps, we also defined Referring Rate and Continued Rate.

The figure above shows an example scenario in which the relevant step of step 15 is step 12. After following steps 12, 13, and 14, a user reaches step 15 but is unable to complete it. The user jumps back to step 12 and follows it again, then returns to step 15 and follows it. (Red: followed; gray: watched but not followed; blue: followed again.)
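The scenario above suggests how a relevant step could be mined from many users' logs: look for users who follow an earlier step again and then progress past the step where they were stuck. The sketch below is one reading of that pattern under stated assumptions, not the paper's definition. Each user is reduced to the sequence of step indices they followed, the stuck step is taken to be the first step completed beyond the furthest point reached before the backtrack, and the returned share of users is a hypothetical quantity, not the Referring Rate or Continued Rate defined in the paper.

```python
from collections import Counter, defaultdict


def backjump_pairs(followed_sequence):
    """followed_sequence: step indices in the order one user followed them.
    Yields (stuck_step, referred_step): the user followed a step at or before the
    furthest point reached, then progressed to stuck_step."""
    furthest = -1
    pending = set()
    for step in followed_sequence:
        if step <= furthest:
            pending.add(step)  # going back to an earlier step
        else:
            for referred in sorted(pending):
                yield step, referred  # the backtrack preceded progress to `step`
            pending.clear()
            furthest = step


def relevant_steps(sequences):
    """sequences: one followed-step sequence per user.
    Returns {stuck_step: (most referred earlier step, share of users who referred to it)}."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for stuck, referred in set(backjump_pairs(seq)):  # count each user once per pair
            counts[stuck][referred] += 1
    n_users = len(sequences)
    return {
        stuck: (c.most_common(1)[0][0], c.most_common(1)[0][1] / n_users)
        for stuck, c in counts.items()
    }
```

With the figure's pattern `[12, 13, 14, 12, 15]`, the only pair produced is `(15, 12)`, matching the example where step 12 is the relevant step of step 15.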

Participants were able to proactively and effectively plan their pauses and playback speed, and vary their concentration level before watching a step by looking at the presented step information. The difficulty visualization also made them feel relieved when they encountered confusing moments. They were also able to identify and recover from errors with the help SoftVideo provided. Relevant step information helped them overcome confusing moments and acquire contextual Photoshop knowledge.

P22: “I put my fingers on the space bar in advance when facing difficult steps so that I can be ready to pause.”

P27: “[I] felt relieved to see many icons when I was struggling because I knew it was not only me and the problem is the step itself.”

P7: “I thought I followed the step Move but it didn’t appear to be so, so I checked it again. I realized that I didn’t press ‘Ctrl’ while doing the action.”

P25: “It helped me a lot in understanding how to use Photoshop in general. I was able to know which actions are related and which should be done for other actions to be done.”

We release the dataset of 120 interaction logs collected across the two tutorial videos together with the corresponding Photoshop usage. Each interaction log consists of video interactions (i.e., pause, play, jump) synchronized with Photoshop usage (i.e., actions performed in the software).

If you have any questions about the dataset, please contact the first author.
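As an illustration of what synchronizing the two streams involves, here is a minimal sketch that merges video and Photoshop events by timestamp and estimates the video position at which each Photoshop action occurred. The `Event` schema, the shared session clock, and the 1x playback assumption are all hypothetical and do not describe the released file format.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(order=True)
class Event:
    timestamp: float                   # seconds since session start (assumed shared clock)
    source: str = field(compare=False)  # "video" or "photoshop"
    name: str = field(compare=False)    # "pause" / "play" / "jump", or a Photoshop action name
    video_time: Optional[float] = field(default=None, compare=False)  # playback position (video events)


def merge_logs(video_events: List[Event], photoshop_events: List[Event]) -> List[Event]:
    """Merge two logs that are each sorted by timestamp into one chronological timeline."""
    return list(heapq.merge(video_events, photoshop_events))


def align_actions(timeline: List[Event]) -> List[Tuple[str, float]]:
    """Estimate the video position of each Photoshop action,
    assuming 1x playback speed between video events."""
    position, playing, last_ts = 0.0, False, 0.0
    aligned = []
    for ev in timeline:
        if ev.source == "video":
            position = ev.video_time
            # play/pause change the playing state; jumps keep it unchanged
            playing = {"play": True, "pause": False}.get(ev.name, playing)
            last_ts = ev.timestamp
        else:
            elapsed = ev.timestamp - last_ts if playing else 0.0
            aligned.append((ev.name, position + elapsed))
    return aligned
```

Aligning actions to video positions is what makes per-step measures possible: once each action has a video time, it can be attributed to the step being shown.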

@inproceedings{yang2022softvideo,
author = {Yang, Saelyne and Yim, Jisu and Baigutanova, Aitolkyn and Kim, Seoyoung and Chang, Minsuk and Kim, Juho},
title = {SoftVideo: Improving the Learning Experience of Software Tutorial Videos with Collective Interaction Data},
year = {2022},
isbn = {9781450391443},
publisher = {Association for Computing Machinery},
address = {New York, NY, USA},
url = {https://doi.org/10.1145/3490099.3511106},
doi = {10.1145/3490099.3511106},
abstract = {Many people rely on tutorial videos when learning to perform tasks using complex software. Watching the video for instructions and applying them to target software requires frequent going back-and-forth between the two, which incurs cognitive overhead. Furthermore, users need to constantly compare the two to see if they are following correctly, as they are prone to missing out on subtle differences. We propose SoftVideo, a prototype system that helps users plan ahead before watching each step in tutorial videos and provides feedback and help to users on their progress. SoftVideo is powered by collective interaction data, as experiences of previous learners with the same goal can provide insights into how they learned from the tutorial. By identifying the difficulty and relatedness of each step from the interaction logs, SoftVideo provides information on each step such as its estimated difficulty, lets users know if they completed or missed a step, and suggests tips such as relevant steps when it detects users struggling. To enable such a data-driven system, we collected and analyzed video interaction logs and the associated Photoshop usage logs for two tutorial videos from 120 users. We then defined six metrics that portray the difficulty of each step, including the time taken to complete a step and the number of pauses in a step, which were also used to detect users’ struggling moments by comparing their progress to the collected data. To investigate the feasibility and usefulness of SoftVideo, we ran a user study with 30 participants where they performed a Photoshop task by following along a tutorial video with SoftVideo. Results show that participants could proactively and effectively plan their pauses and playback speed, and adjust their concentration level. They were also able to identify and recover from errors with the help SoftVideo provides. },
booktitle = {27th International Conference on Intelligent User Interfaces},
pages = {646–660},
numpages = {15},
keywords = {video interaction, software tutorial videos, interaction log analysis, data-driven interface},
location = {Helsinki, Finland},
series = {IUI '22}
}